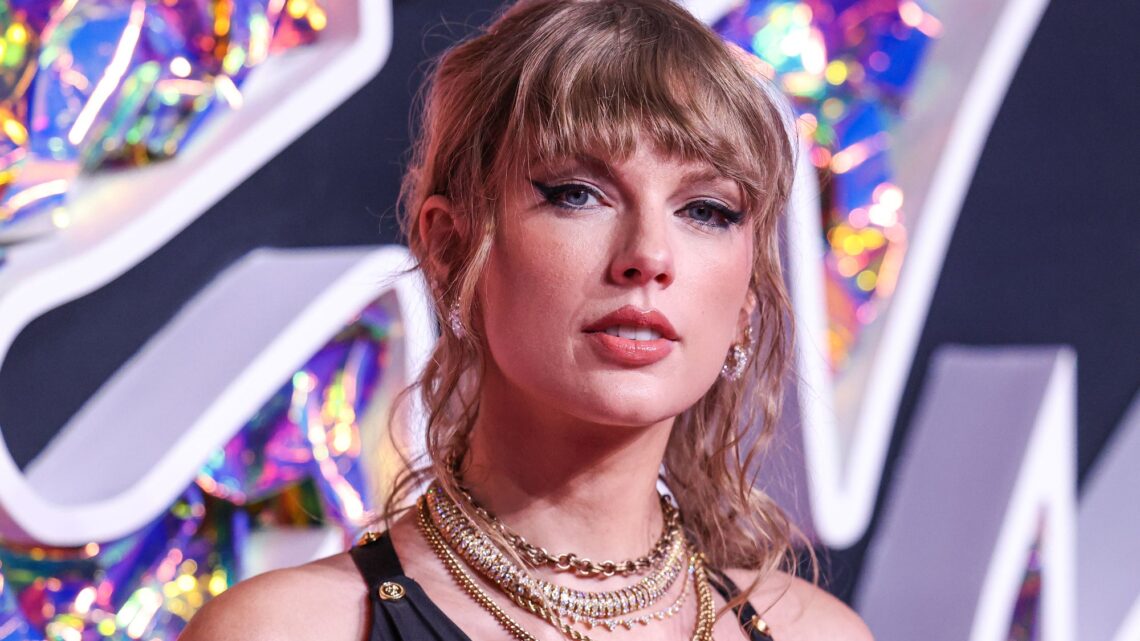

Nonconsensual, AI-generated images and video appearing to show singer Taylor Swift engaged in sex acts flooded X, the site formerly known as Twitter, last week, with one post reportedly viewed 45 million times before it was taken down. The deluge of AI-generated “deepfake” porn persisted for days and only slowed after X temporarily blocked search results for the singer’s name across the platform. Now, lawmakers, advocates, and Swift fans are using the content moderation failure to fuel calls for new laws that clearly criminalize the online spread of sexually explicit AI-generated deepfakes.

How did the Taylor Swift deepfakes spread?

Many of the AI-generated Swift deepfakes reportedly originated on the notoriously misogynistic message board 4chan and in a handful of relatively obscure private Telegram channels. Last week, some of them made the jump to X, where they quickly spread like wildfire. So many accounts flooded X with the deepfake material that searching for the term “Taylor Swift AI” would surface the images and videos. In some regions, The Verge notes, that same hashtag was featured as a trending topic, which amplified the deepfakes even further. One post in particular reportedly received 45 million views and 24,000 reposts before it was eventually removed. It took X 17 hours to take that post down, even though it violated the company’s terms of service.

X did not immediately respond to PopSci’s request for comment.

Posting Non-Consensual Nudity (NCN) images is strictly prohibited on X and we have a zero-tolerance policy towards such content. Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them. We’re closely…

— Safety (@Safety) January 26, 2024

With new iterations of the deepfakes proliferating, X moderators stepped in on Sunday and blocked search results for “Taylor Swift” and “Taylor…
