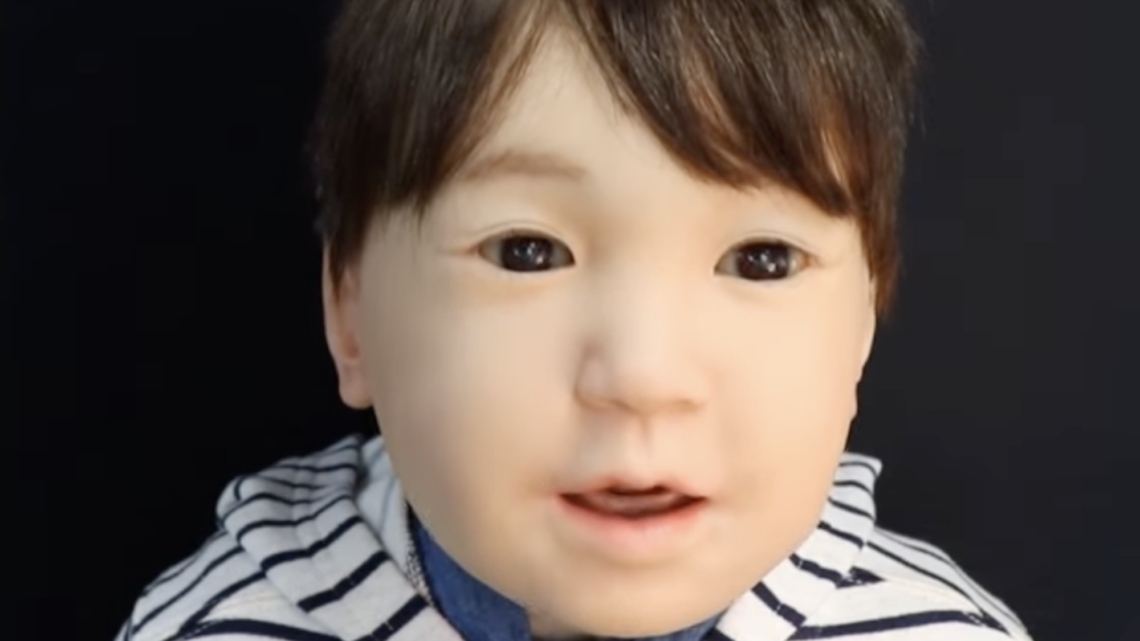

Bipedal robots (at least some of them) are becoming increasingly agile and humanlike in their movements. Despite this, one physical aspect remains stuck in the uncanny valley: realistic facial expressions. Robots still struggle to replicate complex, fluid facial muscle interactions at speeds comparable to those of their biological inspirations. One solution may be to treat expressions as an interplay between various “waveforms.” The result is a new “dynamic arousal expression” system, developed by researchers at Osaka University, that allows a bot to mimic expressions more quickly and seamlessly than its predecessors.

The potential solution, detailed in a study published in the Journal of Robotics and Mechatronics, begins by classifying facial gestures such as yawning, blinking, and breathing as individual waveforms. Each waveform is then linked to the amplitude of movements such as opening and closing the lips, moving the eyebrows, or angling the head. Those amplitudes are governed by a control parameter based on a mood spectrum ranging from “sleepy” to “excited.” The waves then propagate and superpose on one another, adjusting the robot face’s physical features according to its mood. According to the study’s accompanying announcement, the new method frees programmers from having to prepare individualized, choreographed facial movements for each response state.
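To make the superposition idea concrete, here is a minimal Python sketch. It is not the Osaka team’s implementation: the gesture frequencies, the arousal-to-amplitude mappings, the actuator names (`lips`, `eyebrows`, `eyelids`, `head_pitch`), and the function `actuator_targets` are all hypothetical, chosen only for illustration. Each gesture is a waveform whose amplitude depends on a single arousal parameter (0 = sleepy, 1 = excited), and the contributions are simply summed per actuator. The sketch models superposition only; it does not attempt the wave propagation the study describes.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Gesture:
    """A periodic facial gesture modeled as a waveform (hypothetical model)."""
    wave: Callable[[float], float]   # unit waveform evaluated at time t (seconds)
    gain: Callable[[float], float]   # amplitude as a function of arousal in [0, 1]
    weights: Dict[str, float]        # how strongly the gesture drives each actuator


def sine(freq_hz: float) -> Callable[[float], float]:
    """Smooth oscillation, e.g. for breathing."""
    return lambda t: math.sin(2 * math.pi * freq_hz * t)


def pulse(period_s: float, width_s: float) -> Callable[[float], float]:
    """Returns 1.0 during the first width_s seconds of each period, else 0.0."""
    return lambda t: 1.0 if (t % period_s) < width_s else 0.0


# Hypothetical gesture set: breathing grows stronger with arousal,
# yawning fades out as the robot becomes more excited.
GESTURES = {
    "breathing": Gesture(sine(0.25), lambda a: 0.3 + 0.7 * a,
                         {"lips": 0.2, "head_pitch": 0.5}),
    "blinking": Gesture(pulse(3.0, 0.15), lambda a: 1.0,
                        {"eyelids": 1.0}),
    "yawning": Gesture(pulse(20.0, 4.0), lambda a: 1.0 - a,
                       {"lips": 1.0, "eyebrows": -0.3, "head_pitch": -0.4}),
}


def actuator_targets(t: float, arousal: float) -> Dict[str, float]:
    """Superpose all gesture waveforms into per-actuator commands at time t."""
    if not 0.0 <= arousal <= 1.0:
        raise ValueError("arousal must be in [0, 1] (0 = sleepy, 1 = excited)")
    targets: Dict[str, float] = {}
    for gesture in GESTURES.values():
        value = gesture.gain(arousal) * gesture.wave(t)
        for actuator, weight in gesture.weights.items():
            # Superposition: contributions from every gesture add linearly.
            targets[actuator] = targets.get(actuator, 0.0) + weight * value
    return targets


if __name__ == "__main__":
    for arousal in (0.1, 0.9):
        print(arousal, actuator_targets(1.0, arousal))
```

The design choice worth noting is that no expression is stored as a recorded sequence: changing the single `arousal` value reshapes every gesture at once, which mirrors the article’s point that programmers no longer need to choreograph each response state by hand.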

“The automatic generation of dynamic facial expressions to transmit the internal states of a robot, such as mood, is crucial for communication robots,” the team writes in the study. The researchers add that current methods rely on a “patchwork-like replaying of recorded motions” that makes realistic results difficult to achieve.

For example, a “sleepy” spectrum ranking generates certain…
