Hate speech, political propaganda and outright lies are hardly new problems online, even if election years such as this one exacerbate them. The use of bots, or automated social media accounts, has made it much easier to spread disinformation (deliberately false content) as well as inaccurate rumors and other kinds of misinformation. But the bots that afflicted past voting seasons often churned out poorly constructed, grammatically incorrect sentences. Now, as large language models (artificial intelligence systems that create text) become accessible to more people, some researchers fear that automated social media accounts will soon get a lot more convincing.

Disinformation campaigns, online trolls and other “bad actors” are set to increasingly use generative AI to fuel election falsehoods, according to a new study published in PNAS Nexus. In it, researchers project that—based on “prior studies of cyber and automated algorithm attacks”—AI will help spread toxic content across social media platforms on a near-daily basis in 2024. The potential fallout, the study authors say, could affect election results in more than 50 countries holding elections this year, from India to the U.S.

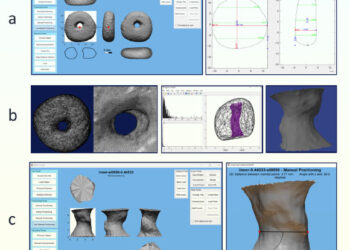

This research mapped the connections between bad actor groups across 23 online platforms that included Facebook and Twitter as well as niche communities on Discord and Gab, says the study’s lead author, Neil Johnson, a physics professor at George Washington University. Extremist groups that post a lot of hate speech, the study found, tend to form and survive longer on smaller platforms, which generally have fewer resources for content moderation. But their messages can have a much wider reach.

Many small…
