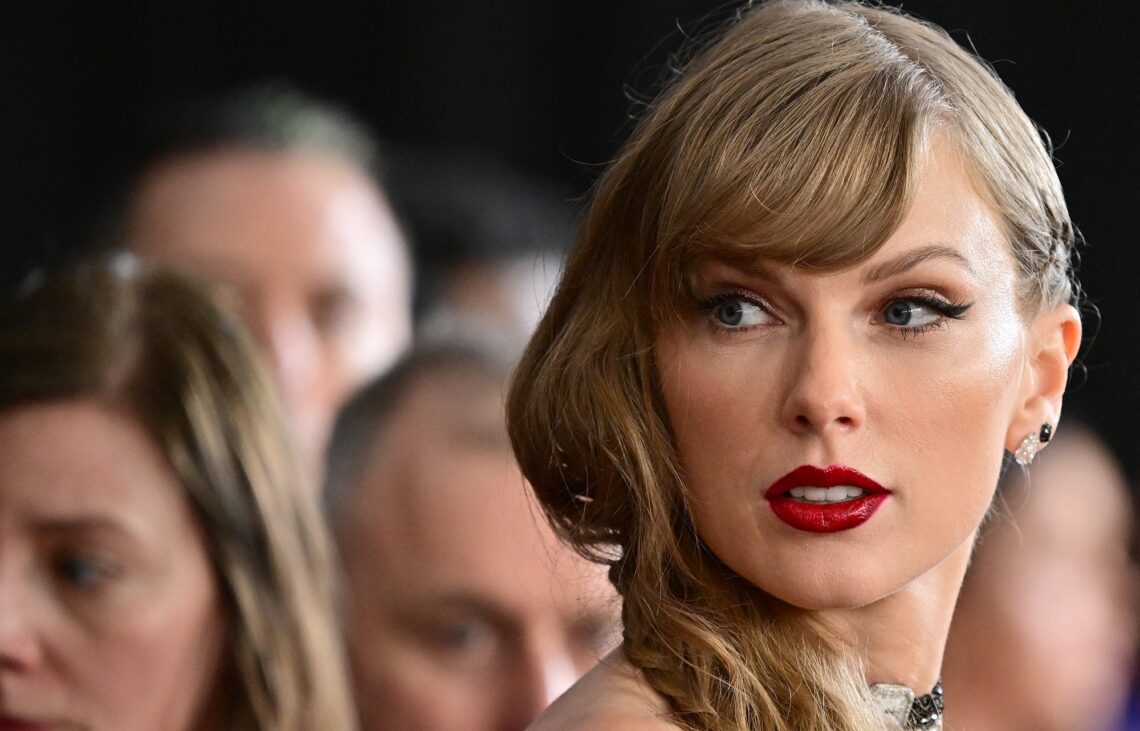

For Taylor Swift, the last few months of 2023 were triumphant. Her Eras Tour was named the highest-grossing concert tour of all time. She debuted an accompanying concert film that breathed new life into the genre. And to cap it off, Time magazine named her Person of the Year.

But in late January the megastar made headlines for a far less empowering reason: she had become the latest high-profile target of sexually explicit, nonconsensual deepfake images made using artificial intelligence. Swift’s fans were quick to report the violative content as it circulated on social media platforms, including X (formerly Twitter), which temporarily blocked searches of Swift’s name. It was hardly the first such case—women and girls across the globe have already faced similar abuse. Swift’s cachet helped propel the issue into the public eye, however, and the incident amplified calls for lawmakers to step in.

“We are too little, too late at this point, but we can still try to mitigate the disaster that’s emerging,” says Mary Anne Franks, a professor at George Washington University Law School and president of the Cyber Civil Rights Initiative. Women are “canaries in the coal mine” when it comes to the abuse of artificial intelligence, she adds. “It’s not just going to be the 14-year-old girl or Taylor Swift. It’s going to be politicians. It’s going to be world leaders. It’s going to be elections.”

Swift, who recently became a billionaire, might be able to make some progress through individual litigation, Franks says. (Swift’s record label did not respond to a request for comment as to whether the artist will be pursuing lawsuits or supporting efforts to crack down on deepfakes.) Yet…
